As you might have read in this blog, I have owned a Neo FreeRunner for a year now. I have used it far less than I should have, mostly because it’s a wonderful toy, but a lousy phone. The hardware is fine, although externally quite a bit less sexy than other smartphones such as the iPhone. The software, however, is not very mature. Because the platform is so open, several Linux-centric distros have been developed for it, but I haven’t been able to find one that turns the Neo into an everyday phone.

But let’s cut the rant and stick to the point: the Neo is a nice playground for a computer geek. Following my desire to play, I installed Debian on it. Next, I decided to make some GUI programs for it, such as a screen locker. I found Zedlock, a program written in Python using GTK+ and Cairo. Basically, Zedlock paints a lock on the screen and refuses to disappear until you draw a big “Z” on the screen with your finger. Well, that’s what it’s supposed to do; the 0.1 version available at the Openmoko wiki is not functional. Still, in Zedlock I found just what I wanted: a piece of software capable of doing really cool graphical things on the screen of my Neo, while being simple enough for me to understand.

Using Zedlock as a base, I am starting to have real fun programming GUIs, but a problem quickly arose: they respond slowly. My programs, like all GUIs, draw an image on the screen and react to taps in certain places (that is, buttons) by doing things that require the image on the screen to be modified and repainted. This repainting, done as in Zedlock, is too slow. Looking for a way to speed things up, I googled the issue and found a StackOverflow question that suggested the obvious route: caching the images. Let’s see how I did it, and how it turned out.

Material

You can download the three Python scripts, plus two sample PNGs, from: http://isilanes.org/pub/blog/pygtk/.

Version 0

You can download this program here. Its main loop follows:

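```python
import sys

import gobject
import gtk

# ... class Canvas (omitted): holds all variables and callbacks ...

C = Canvas()

# Main window:
C.win = gtk.Window()
C.win.set_default_size(C.width, C.height)

# Drawing area:
C.canvas = gtk.DrawingArea()
C.win.add(C.canvas)
C.canvas.connect('expose_event', C.expose_win)

C.regenerate_base()

# Repeat drawing of bg:
try:
    C.times = int(sys.argv[1])
except (IndexError, ValueError):
    # No (valid) integer argument given: repaint just once.
    C.times = 1

gobject.idle_add(C.regenerate_base)

C.win.show_all()

# Main loop:
gtk.main()
```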

As you can see, it creates a GTK+ window (gtk.Window()) with a DrawingArea inside (gtk.DrawingArea()), and then registers regenerate_base() with gobject.idle_add(), so that it is executed every time the main loop is idle. Canvas() is a class whose structure is not relevant for the discussion here; it basically holds all variables and relevant functions. The regenerate_base() function follows:

```python
def regenerate_base(self):
    # Base Cairo Destination surface:
    self.DestSurf = cairo.ImageSurface(cairo.FORMAT_ARGB32, self.width, self.height)
    self.target = cairo.Context(self.DestSurf)

    # Background:
    if self.bg == 'bg1.png':
        self.bg = 'bg2.png'
    else:
        self.bg = 'bg1.png'

    self.i += 1

    image = cairo.ImageSurface.create_from_png(self.bg)
    buffer_surf = cairo.ImageSurface(cairo.FORMAT_ARGB32, self.width, self.height)
    buffer = cairo.Context(buffer_surf)
    buffer.set_source_surface(image, 0, 0)
    buffer.paint()

    self.target.set_source_surface(buffer_surf, 0, 0)
    self.target.paint()

    # Redraw interface:
    self.win.queue_draw()

    if self.i > self.times:
        sys.exit()

    return True
```

As you can see, it paints the whole window with a PNG file (loading it with create_from_png() and painting it through an intermediate buffer surface onto the target), alternating between bg1.png and bg2.png each time it is called (the if/else block). Since the repainting is done every time the main event loop is idle, images are simply painted to the screen as fast as possible. After a given number of repaints, the script exits.

You can run Version 0 by placing two suitable PNGs (480×640 pixels), named bg1.png and bg2.png, in the same directory as the script. If an integer argument is given to the script, it repaints the window that many times, then exits (by default, just once). You can time this script by executing, e.g.:

```
% /usr/bin/time -f %e ./p0.py 1000
```
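The reason regenerate_base() ends with return True is the contract of gobject.idle_add(): the main loop keeps calling an idle callback whenever it has nothing else to do, and removes it once the callback returns False. The toy loop below is not GTK code; it only mimics that contract in plain Python 3, so the behaviour can be tried without a display. FakeCanvas and run_idle_loop() are hypothetical names, and FakeCanvas stops by returning False instead of calling sys.exit() as the real script does.

```python
class FakeCanvas:
    """Hypothetical stand-in for Canvas: alternates backgrounds like
    regenerate_base() does, but only records the name it would paint."""

    def __init__(self, times):
        self.times = times
        self.i = 0
        self.bg = 'bg1.png'
        self.painted = []

    def regenerate_base(self):
        # Alternate between the two backgrounds on every call.
        self.bg = 'bg2.png' if self.bg == 'bg1.png' else 'bg1.png'
        self.i += 1
        self.painted.append(self.bg)
        # True: "call me again when idle"; False: remove this callback.
        return self.i < self.times


def run_idle_loop(callbacks, max_iterations=100_000):
    """Toy model of idle handling (not GTK's implementation): call each
    registered callback once per pass and drop it when it returns False."""
    active = list(callbacks)
    passes = 0
    while active and passes < max_iterations:
        active = [cb for cb in active if cb()]
        passes += 1
    return passes


canvas = FakeCanvas(times=5)
print(run_idle_loop([canvas.regenerate_base]))  # → 5
print(canvas.painted)  # → ['bg2.png', 'bg1.png', 'bg2.png', 'bg1.png', 'bg2.png']
```

Because nothing else competes for the loop here, every pass calls the callback again immediately, which is the "as fast as possible" behaviour described for Version 0.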

Version 1

You can download this version here.

The first difference from Version 0 (p0.py) is that regenerate_base() has been split in two: its first part becomes generate_base(), which is executed only once, at program startup (see below), and the rest stays in regenerate_base(), which is executed every time the background changes.

```python
def generate_base(self):
    # Base Cairo Destination surface:
    self.DestSurf = cairo.ImageSurface(cairo.FORMAT_ARGB32, self.width, self.height)
    self.target = cairo.Context(self.DestSurf)
```

The main difference, though, is that two new functions are introduced:

```python
def mk_iface(self):
    if self.bg not in self.buffers:
        self.buffers[self.bg] = self.generate_buffer(self.bg)

    self.target.set_source_surface(self.buffers[self.bg], 0, 0)
    self.target.paint()

def generate_buffer(self, fn):
    image = cairo.ImageSurface.create_from_png(fn)
    buffer_surf = cairo.ImageSurface(cairo.FORMAT_ARGB32, self.width, self.height)
    buffer = cairo.Context(buffer_surf)
    buffer.set_source_surface(image, 0, 0)
    buffer.paint()

    # Return buffer surface:
    return buffer_surf
```

The function mk_iface() is called within regenerate_base() and draws the background. However, the actual generation of the background image (the Cairo surface) is done in the second function, generate_buffer(), and happens only once per background (i.e., twice in total), because mk_iface() reuses the previously generated surfaces cached in self.buffers.
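The caching pattern itself does not depend on Cairo. The sketch below isolates it in plain Python 3: SurfaceCache and fake_generate_buffer() are hypothetical names, the fake loader stands in for generate_buffer() (which in the real script returns a cairo.ImageSurface), and the dictionary plays the role of self.buffers.

```python
class SurfaceCache:
    """Run an expensive loader at most once per key and reuse the result,
    like mk_iface() does with self.buffers."""

    def __init__(self, loader):
        self.loader = loader
        self.buffers = {}
        self.loads = 0  # how many times the expensive loader actually ran

    def get(self, key):
        if key not in self.buffers:
            self.buffers[key] = self.loader(key)
            self.loads += 1
        return self.buffers[key]


def fake_generate_buffer(fn):
    # Hypothetical stand-in for decoding a PNG into a surface.
    return {'source': fn}


cache = SurfaceCache(fake_generate_buffer)
for n in range(1000):
    bg = 'bg1.png' if n % 2 == 0 else 'bg2.png'
    surface = cache.get(bg)

print(cache.loads)  # → 2
```

Wrapping the loader in functools.lru_cache(maxsize=None) would give the same once-per-key behaviour; the explicit dictionary keeps the cache as an ordinary attribute of the object, as in the post. The cost is memory: a 480×640 ARGB32 surface takes 480 × 640 × 4 bytes, about 1.2 MB, so this pays off for a small, fixed set of images such as the two backgrounds here.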

Version 2

You can download this version here.

The difference from Version 1 is that I eliminated some apparently redundant steps that created surfaces on top of surfaces. As a result, the generate_base() function disappears again: I get rid of the DestSurf and C.target variables, and mk_iface() now paints the cached surface directly onto the DrawingArea’s window. The mk_iface() and expose_win() functions end up as follows:

```python
def mk_iface(self):
    if self.bg not in self.buffers:
        self.buffers[self.bg] = self.generate_buffer(self.bg)

    buffer = self.canvas.window.cairo_create()
    buffer.set_source_surface(self.buffers[self.bg], 0, 0)
    buffer.paint()

def expose_win(self, drawing_area, event):
    nm = 'bg1.png'
    if nm not in self.buffers:
        self.buffers[nm] = self.generate_buffer(nm)

    ctx = drawing_area.window.cairo_create()
    ctx.set_source_surface(self.buffers[nm], 0, 0)
    ctx.paint()
```

A side effect is that I can also get rid of the forced redraws via self.win.queue_draw(), since mk_iface() now draws straight to the window.

Results

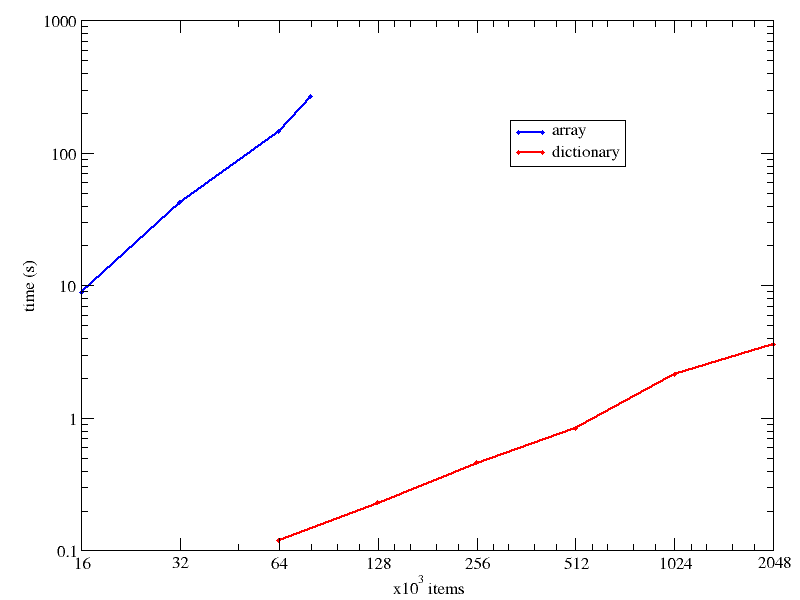

I ran the three versions above with different values of C.times, i.e., with a varying number of repaints. The command used (actually run from inside a script) was something like the one mentioned above:

```
% /usr/bin/time -f %e ./p0.py 1000
```
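The timing script itself is not part of the downloadable material. A hypothetical Python 3 equivalent might look like the sketch below: time_runs() is an illustrative name, and it measures elapsed wall-clock time with time.perf_counter(), the same quantity that /usr/bin/time -f %e reports, instead of calling /usr/bin/time itself.

```python
import subprocess
import time


def time_runs(command, counts):
    """Run `command` followed by each repaint count in `counts` and return
    a dict mapping count -> elapsed wall-clock seconds.

    `command` is a list such as ['./p0.py']; each run must exit with
    status 0 (check=True raises CalledProcessError otherwise)."""
    results = {}
    for n in counts:
        start = time.perf_counter()
        subprocess.run(command + [str(n)], check=True)
        results[n] = time.perf_counter() - start
    return results
```

For example, time_runs(['./p0.py'], [1, 4, 16, 64, 256, 1024]) would cover the repaint counts used for Flanders. The measured time includes the Python start-up of each script, which is what the startup term of a linear fit absorbs.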

The following tables summarize the results for Flanders and Maude (see my computers), a desktop P4 and my Neo FreeRunner, respectively. All times are in seconds.

Flanders (desktop P4):

| Repaints | Version 0 | Version 1 | Version 2 |
|---|---|---|---|
| 1 | 0.26 | 0.43 | 0.33 |
| 4 | 0.48 | 0.40 | 0.42 |
| 16 | 0.99 | 0.43 | 0.40 |
| 64 | 2.77 | 0.76 | 0.56 |
| 256 | 9.09 | 1.75 | 1.15 |
| 1024 | 37.03 | 6.26 | 3.44 |

Maude (Neo FreeRunner):

| Repaints | Version 0 | Version 1 | Version 2 |
|---|---|---|---|
| 1 | 4.17 | 4.70 | 5.22 |
| 4 | 8.16 | 6.35 | 6.41 |
| 16 | 21.58 | 14.17 | 12.28 |
| 64 | 75.14 | 44.43 | 35.76 |
| 256 | 288.11 | 165.58 | 129.56 |
| 512 | 561.78 | 336.58 | 254.73 |

The data in the tables above have been fitted to a linear equation of the form t = A + B·n, where n is the number of repaints. Parameter A represents a startup time, whereas B is the time taken by each repaint. The linear fits are quite good; the fitted parameters are given in the following tables (A in milliseconds, B in milliseconds per repaint):

Flanders:

| Parameter | Version 0 | Version 1 | Version 2 |
|---|---|---|---|
| A (ms) | 291 | 366 | 366 |
| B (ms/repaint) | 36 | 6 | 3 |

Maude:

| Parameter | Version 0 | Version 1 | Version 2 |
|---|---|---|---|
| A (ms) | 453 | 3218 | 4530 |
| B (ms/repaint) | 1092 | 648 | 487 |
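The post does not say which tool produced the fits. For reference, an ordinary least-squares fit of t = A + B·n takes only a few lines of plain Python 3; linear_fit() is a hypothetical helper, not part of the downloadable scripts.

```python
def linear_fit(ns, ts):
    """Ordinary least-squares fit of t = A + B*n.

    Returns (A, B): A is the startup time and B the time per repaint,
    both in the units of `ts`."""
    if len(ns) != len(ts) or len(ns) < 2:
        raise ValueError('need at least two (n, t) pairs of equal length')
    m = len(ns)
    mean_n = sum(ns) / m
    mean_t = sum(ts) / m
    s_nn = sum((n - mean_n) ** 2 for n in ns)
    s_nt = sum((n - mean_n) * (t - mean_t) for n, t in zip(ns, ts))
    B = s_nt / s_nn
    A = mean_t - B * mean_n
    return A, B


# Flanders, Version 0 (seconds), from the first results table:
repaints = [1, 4, 16, 64, 256, 1024]
seconds = [0.26, 0.48, 0.99, 2.77, 9.09, 37.03]
A, B = linear_fit(repaints, seconds)
print(f'A = {A * 1000:.0f} ms, B = {B * 1000:.1f} ms/repaint')
# → A = 292 ms, B = 35.8 ms/repaint
```

These values are close to the 291 ms and 36 ms listed for Flanders, Version 0.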

Darn it! I have mixed feelings about the results. On the desktop computer (Flanders), the gains are huge, but hardly noticeable. Caching the images (Version 1) makes for a 6x speedup, and Version 2 gives another twofold increase in speed (a total of 12x speedup!). However, from a user’s point of view, a 36 ms refresh is just as immediate as a 6 ms one.

On the Neo (Maude), on the other hand, the gains are less spectacular: the total speedup for Version 2 is a mere 2x. Still, half-second repaints instead of one-second ones are noticeable, so there’s that.
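The speedups quoted above are simply ratios of the fitted per-repaint times B. Making that arithmetic explicit in Python 3 (the values are copied from the parameter tables; speedups() is a hypothetical helper):

```python
# Fitted per-repaint times B (ms/repaint) from the parameter tables.
per_repaint_ms = {
    'Flanders': {0: 36, 1: 6, 2: 3},
    'Maude': {0: 1092, 1: 648, 2: 487},
}


def speedups(b):
    """Speedup of each version relative to Version 0: B(v0) / B(v)."""
    return {version: b[0] / bv for version, bv in b.items()}


for machine, b in per_repaint_ms.items():
    print(machine, {v: round(s, 2) for v, s in speedups(b).items()})
# → Flanders {0: 1.0, 1: 6.0, 2: 12.0}
# → Maude {0: 1.0, 1: 1.69, 2: 2.24}
```

Using B rather than raw timings keeps the startup cost A out of the comparison, which matters on Maude, where startup alone takes several seconds.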

And at least I had fun and learned in the process! :^)