Update: this script has been superseded by ChopZip

Introduction

I discovered the [[Lempel-Ziv-Markov chain algorithm|LZMA]] compression algorithm some time ago, and have been thrilled by its capabilities ever since. It achieves higher compression ratios than even [[bzip2]], with a faster decompression time. However, although decompressing is fast, compressing is not: LZMA is even slower than bzip2. On the other hand, [[gzip]] remains blazing fast in comparison, while providing a decent level of compression.

More recently I discovered pbzip2, a parallel implementation of bzip2. With the increasing popularity of multi-core processors (I have a quad-core at home myself), parallelizing the compression tools is a very good idea. pbzip2 performs really well, producing bzip2-compatible files with near-linear scaling with the number of CPUs.

Since LZMA is such a high-performance compressor, I wondered whether its speed could be boosted by running it in parallel. Although the [[Lempel-Ziv-Markov chain algorithm|Wikipedia article]] states that the algorithm can be parallelized, I found no such implementation in Ubuntu 9.04, where the utility provided by the lzma package is exclusively serial. Not finding one, I set out to write it myself.

About plzma.py

Any compression can be parallelized as follows:

- Split the original file into as many pieces as CPU cores available

- Compress (simultaneously) all the pieces

- Create a single file by joining all the compressed pieces, and call the result “the compressed file”

In a Linux environment, these three tasks can be carried out easily by split, lzma itself, and tar, respectively. I just wrote a [[Python (programming language)|Python]] script to automate these tasks, called it plzma.py, and put it on my web site for anyone to download (it’s GPLed). Please note that plzma.py has been superseded by chopzip, starting with revision 12, whereas the latest plzma is revision 6.
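The three steps can also be sketched entirely in Python. This is not the actual plzma.py, which drives the external split, lzma and tar tools. It is a minimal in-memory illustration that assumes the standard `lzma` and `tarfile` modules, uses threads instead of separate processes (CPython’s `lzma` module releases the GIL while compressing, as far as I know), and invents the names `split_bytes`, `compress_chunk`, `parallel_compress` and the `chunk.NN.lz` member names for the example.

```python
import io
import lzma
import tarfile
from concurrent.futures import ThreadPoolExecutor


def split_bytes(data, pieces):
    """Step 1: cut the input into at most `pieces` chunks of equal size."""
    size = max(1, -(-len(data) // pieces))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)] or [b""]


def compress_chunk(chunk):
    """Step 2 (per chunk): legacy .lzma container, compression level 3."""
    return lzma.compress(chunk, format=lzma.FORMAT_ALONE, preset=3)


def parallel_compress(data, pieces=4):
    """Steps 1-3: split, compress all chunks concurrently, join in a tar."""
    chunks = split_bytes(data, pieces)
    with ThreadPoolExecutor(max_workers=pieces) as pool:
        compressed = list(pool.map(compress_chunk, chunks))

    # Step 3: store every compressed chunk as one member of a tar archive.
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for idx, blob in enumerate(compressed, start=1):
            info = tarfile.TarInfo(name=f"chunk.{idx:02d}.lz")  # hypothetical naming
            info.size = len(blob)
            tar.addfile(info, io.BytesIO(blob))
    return buf.getvalue()
```

Reading the whole file into memory keeps the sketch short; for files of several hundred MB, as in the benchmarks here, a real tool would stream the chunks from disk instead.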

I must remark that, while pbzip2 generates bzip2-compatible compressed files, that is not the case with plzma. The products of plzma compression must be decompressed with plzma as well. The actual format of a plzma file is just a TAR file containing as many LZMA-compressed chunks as CPUs used for compression. These chunks, once decompressed individually, can be concatenated (with the cat command) to form the original file.
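Given that format, decompression amounts to unpacking the tar, decompressing each chunk and concatenating the results in order. The following is a rough sketch of that idea, not the code plzma itself uses. It assumes Python’s standard `tarfile` and `lzma` modules, assumes the chunk names sort in their original order (zero-padded numbers), and `join_chunks` is a name made up for the example.

```python
import lzma
import tarfile


def join_chunks(archive_path, out_path):
    """Rebuild the original file from a tar of LZMA-compressed chunks."""
    with tarfile.open(archive_path, mode="r") as tar, open(out_path, "wb") as out:
        # Assumption: member names sort in the order the chunks were cut.
        members = sorted(
            (m for m in tar.getmembers() if m.isfile()),
            key=lambda m: m.name,
        )
        for member in members:
            compressed = tar.extractfile(member).read()
            # The default FORMAT_AUTO accepts both .lzma and .xz streams.
            out.write(lzma.decompress(compressed))
```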

Benchmarks

What review of compression tools lacks benchmarks? No matter how inaccurate or silly, none of them do. And neither does mine :^)

I used three (single) files as reference:

- molekel.tar – a 108 MB tar file of the (GPL) [[Molekel]] 5.0 source code

- usr.bin.tar – a 309 MB tar file of the contents of my /usr/bin/ dir

- hackable.tar – a 782 MB tar file of the hackable:1 [[Debian]]-based distro for the [[Neo FreeRunner]]

The second case is intended as an example of binary file compression, whereas the other two are more of a “real-life” example. I didn’t test text-only files… I might in the future, but don’t expect the conclusions to change much. The testbed was my Frink desktop PC (Intel Q8200 quad-core).

The options for each tool were:

- gzip/bzip2/pbzip2: compression level 6

- lzma/plzma: compression level 3

- pbzip2/plzma: 4 CPUs

Compressed size

The most important feature of a compressor is the size of the resulting file. After all, we use it in the first place to save space. No matter how fast an algorithm is, if the resulting file is bigger than the original file I wouldn’t use it. Would you?

The graph below shows the compressed size ratio for compression of the three test files with each of the five tools considered. The compressed size ratio is defined as the compressed size divided by the original size for each file.

This test holds few surprises: gzip is the least effective and LZMA the most. The point to make here is that the parallel implementations perform as well (or as badly) as their serial counterparts.

If you are unimpressed by the supposedly higher performance of bzip2 and LZMA over gzip, when in the picture all final sizes do not look very different, recall that gzip compressed molekel.tar ~ 3 times (to a 0.329 ratio), whereas LZMA compressed it ~ 4.3 times (to a 0.233 ratio). You could stuff 13 LZMAed files where only 9 gzipped ones fit (and just 3 uncompressed ones).
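The arithmetic behind those numbers is easy to check. The snippet below uses only the two ratios quoted above for molekel.tar; the function names are mine, and the "space budget" of three uncompressed copies is the one from the example.

```python
def compression_factor(ratio):
    """How many times smaller the file gets (original / compressed)."""
    return 1.0 / ratio


def files_that_fit(ratio, budget=3.0):
    """Compressed copies fitting in the space of `budget` uncompressed ones."""
    return budget / ratio


for tool, ratio in {"gzip": 0.329, "LZMA": 0.233}.items():
    print(f"{tool}: {compression_factor(ratio):.2f}x smaller, "
          f"{files_that_fit(ratio):.1f} copies in the space of 3 originals")
```

The LZMA figure comes out at roughly 12.9 copies, which the text rounds to 13.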

Compression time

However important the compressed size is, compression time matters too. Actually, that’s the very issue I am trying to address by parallelizing LZMA: to make it faster while keeping its high compression ratio.

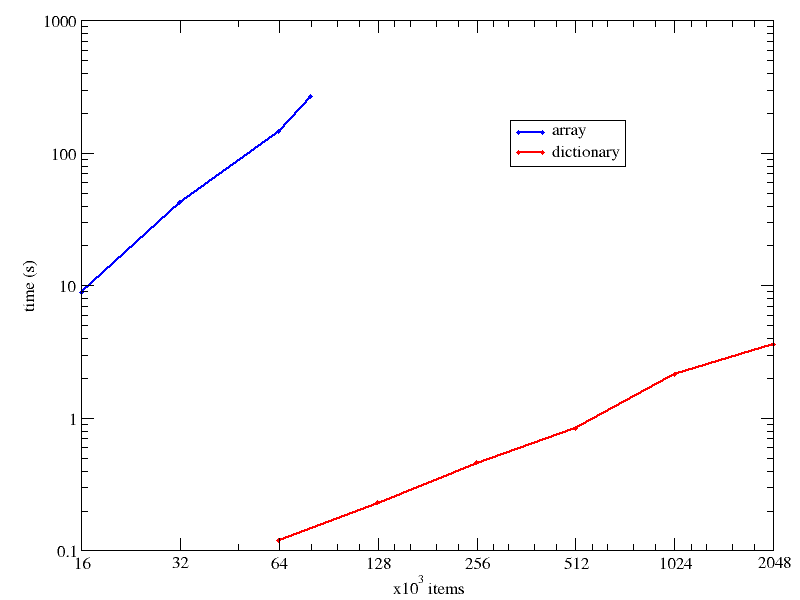

The graph below shows the normalized times for compression of the three test files with each of the five tools considered. The normalized time is taken as the total time divided by the time it took gzip to finish (an arbitrary scale with t(gzip)=1.0).

Roughly speaking, we could say that in my setting pbzip2 makes bzip2 as fast as gzip, and plzma makes LZMA as fast as serial bzip2.

The speedups for bzip2/pbzip2 and LZMA/plzma are given in the following table:

| File | pbzip2 | plzma |
|---|---|---|
| molekel.tar | 4.00 | 2.72 |
| usr.bin.tar | 3.61 | 3.38 |
| hackable.tar | 3.80 | 3.04 |

The performance of plzma is nowhere near that of pbzip2, but I’d call it acceptable (wouldn’t I? I’m the author!). There are two reasons I can think of to explain the lower-than-linear scalability. The first is the overhead imposed by cutting the file into pieces and then assembling them back. The second, maybe more important, is disk performance. Each core may compress its chunk independently, but the disk I/O for reading the chunks and writing them back compressed is done simultaneously on the same disk, which the four processes share.
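One way to put a number on "lower-than-linear" is the parallel efficiency, that is, the speedup divided by the number of CPUs (4 in these tests), where 1.0 means perfect linear scaling. The short script below just applies that definition to the speedups measured above; the helper name `efficiency` is mine.

```python
CPUS = 4  # both pbzip2 and plzma were run with 4 CPUs

# Speedups over the serial tools, as measured above.
speedups = {
    "molekel.tar": {"pbzip2": 4.00, "plzma": 2.72},
    "usr.bin.tar": {"pbzip2": 3.61, "plzma": 3.38},
    "hackable.tar": {"pbzip2": 3.80, "plzma": 3.04},
}


def efficiency(speedup, cpus=CPUS):
    """Fraction of the ideal (linear) speedup actually achieved."""
    return speedup / cpus


for name, row in speedups.items():
    cells = ", ".join(f"{tool} {efficiency(s):.2f}" for tool, s in row.items())
    print(f"{name}: {cells}")
```

By this measure pbzip2 stays at 0.90 or above for all three files, while plzma ranges from 0.68 (molekel.tar) to about 0.85 (usr.bin.tar).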

Update: I think that a good deal of under-linearity comes from the fact that files of equal size will not be compressed in an equal time. Each chunk compression will take a slightly different time to complete, because some will be easier than others to compress. The program waits for the last compression to finish, so it’s as slow as the slowest one. It is also true that pieces of 1/N size might take more than 1/N time to complete, so the more chunks, the slower the compression in total (the opposite could also be true, though).
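A toy model makes the "as slow as the slowest one" effect concrete. It assumes the serial run takes the sum of the individual chunk times and the parallel run takes the maximum, and it ignores the split/join and disk overheads discussed above. The chunk times below are invented for illustration, not measurements.

```python
def parallel_speedup(chunk_times):
    """Speedup when all chunks run at once and we wait for the slowest."""
    serial_time = sum(chunk_times)    # one chunk after another
    parallel_time = max(chunk_times)  # all at once, wait for the last
    return serial_time / parallel_time


# Hypothetical per-chunk compression times, in seconds.
balanced = [10.0, 10.0, 10.0, 10.0]
unbalanced = [8.0, 9.0, 10.0, 13.0]

print(parallel_speedup(balanced))    # 4.0
print(parallel_speedup(unbalanced))  # 40/13, a bit under 3.1
```

Both cases involve the same total amount of work (40 seconds), but a single chunk that is 30% slower than average is enough to pull the speedup on four cores down from 4 to about 3.1.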

Decompression times

We usually pay less attention to decompression time, because decompression is much faster (and because we often compress things never to open them again, in which case we had better have deleted them in the first place… but I digress).

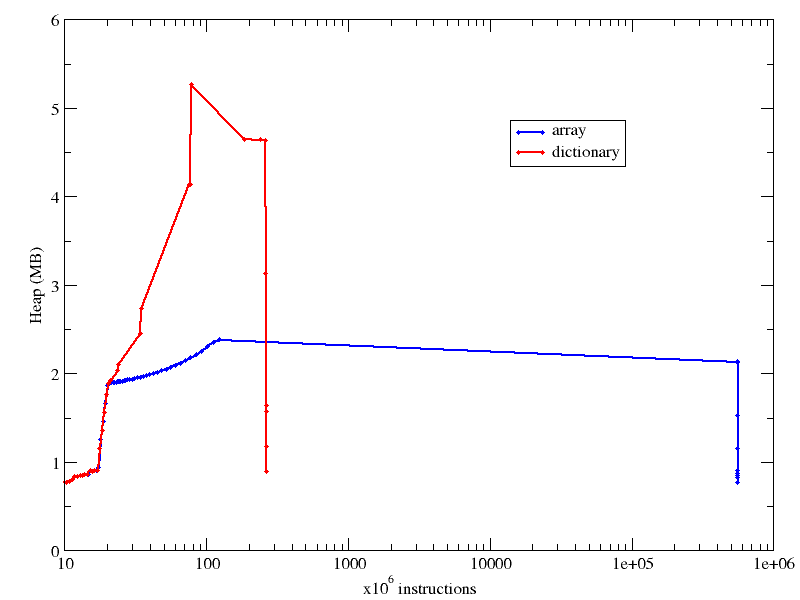

The following graph shows the decompression data equivalent to the compression times graph above.

The most noteworthy point is that pbzip2 decompresses pbzip2-compressed files faster than bzip2 does with bzip2-compressed files. That is, both compression and decompression benefit from the parallelization. However, for plzma that is not the case: decompression is slower than with the serial LZMA. This is due to two effects. First, the decompression part is still not parallelized in my script (it soon will be). This alone would lead to decompression speeds close to those of serial LZMA. However, it is slower still because of the second effect: the overhead caused by splitting and then joining.

Another result worth noting is that, although LZMA is much slower than even bzip2 at compressing, its decompression is actually faster. This is no accident. LZMA was designed with fast decompression in mind, so that it could be used in, e.g., software distribution, where a single person compresses the original data (however painstakingly), and then the users download the result (the smaller, the faster) and decompress it to use it.

Conclusions

While there is room for improvement, plzma seems like a viable option to speed up general compression tasks where a high compression ratio (LZMA level) is desired.

I would like to stress that plzma files cannot be decompressed with LZMA alone. If you don’t use plzma to decompress, you can follow these steps:

% tar -xf file.plz

% lzma -d file.0[1-4].lz

% cat file.0[1-4] > file

% rm file.0[1-4] file.plz